How Xgrid Built a HIPAA-Aligned AI Health Chat with Temporal and Vertex AI

Executive Summary

Xgrid partnered with an early-stage healthcare startup to deliver a medical AI chat experience with enterprise-grade reliability at startup speed. The product depends on safe responses, consistent context, and low-latency generation while handling sensitive health data. Xgrid made Temporal the orchestration backbone and Vertex AI the model layer, delivering a production-ready system that is resilient, auditable, and designed for HIPAA-aligned operations from day one.

The Problem: Medical AI at Startup Speed

The client needed an AI health assistant that could serve real users quickly, with a tiny team, and without sacrificing safety or reliability. Xgrid was brought in to design and build the system from scratch as a greenfield project, including the orchestration model, data flow, and operational guardrails. The solution also had to operate within medical data constraints while integrating external AI services that can experience latency spikes and rate limits.

Product Goals

Xgrid aligned with the client on a clear set of startup-friendly goals:

- Provide safe, grounded medical responses with guardrails and disclaimers.

- Preserve conversation context reliably across sessions.

- Stream responses in real time for a responsive user experience.

- Recover automatically from transient failures and provider rate limits.

- Maintain a verifiable audit trail for HIPAA-aligned operations.

- Keep operational complexity low for a small team.

Discovery: Understanding the Constraints

Xgrid validated the core requirements and identified the areas where reliability would be most fragile. Because this was a greenfield build, those constraints informed every design decision before the first workflow was written.

Infrastructure Assessment

The baseline architecture needed to span GCP services, real-time chat APIs, and long-running workflows. Key gaps Xgrid identified early:

- State durability was missing. A basic request-response model could not protect long-running AI calls from restarts or network failures.

- External dependencies were brittle. Vertex AI calls can fail on rate limits, timeouts, or transient errors.

- Observability was thin. Xgrid needed strong, end-to-end tracing across orchestration, AI calls, and storage updates.

- Compliance needed an audit trail. Xgrid required a system that could prove what happened and when, not just log it.

Application Architecture Analysis

The application needed to coordinate multiple steps per user query:

- A guardrail pass to prevent unsafe or non-medical requests.

- A retrieval step to ground answers with citations.

- A synthesis step that can stream partial output.

- A final audit and publish step with disclaimers.

Without orchestration, these steps would be hard to retry, debug, or evolve safely.

Greenfield System Design Considerations

Starting from scratch allowed Xgrid to define the system boundaries and failure modes upfront:

- Latency budgets: streaming responses had to feel real time even when upstream AI latency fluctuated.

- Durability and state: session history had to survive retries, restarts, and partial failures.

- Safety by default: guardrails needed to be enforced inside the workflow, not just at the UI edge.

- Secure data flow: sensitive inputs required PHI checks before and after model calls.

- Operational simplicity: a small team needed strong observability without complex infrastructure.

- Scalable concurrency: activity throttling and backoff were required to handle rate limits.
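The backoff requirement above can be illustrated with a minimal sketch of capped exponential backoff with jitter. Temporal expresses the same idea declaratively through an activity retry policy (initial interval, backoff coefficient, maximum interval, maximum attempts); the plain-Python version below only shows the behavior. `TransientError`, `backoff_delays`, and `call_with_backoff` are hypothetical names, and the default intervals are assumptions rather than the values used in the project.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class TransientError(Exception):
    """Hypothetical marker for retryable failures (rate limits, timeouts)."""


def backoff_delays(initial: float = 0.5, factor: float = 2.0,
                   maximum: float = 8.0, attempts: int = 5) -> list[float]:
    """Return the capped exponential delay ceilings between successive attempts."""
    return [min(initial * factor ** i, maximum) for i in range(attempts - 1)]


def call_with_backoff(fn: Callable[[], T], *, attempts: int = 5,
                      sleep: Callable[[float], None] = time.sleep) -> T:
    """Call fn, retrying on TransientError with jittered exponential backoff."""
    delays = backoff_delays(attempts=attempts)
    for attempt in range(attempts):
        try:
            return fn()
        except TransientError:
            if attempt == attempts - 1:
                raise  # retries exhausted: surface the error to the caller
            # Full jitter spreads retries out so callers do not retry in lockstep.
            sleep(random.uniform(0, delays[attempt]))
    raise AssertionError("unreachable")
```

Passing `sleep` as a parameter keeps the retry loop testable without real waiting.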

Solution Architecture: Why Temporal Was the Right Backbone

After evaluating several orchestration patterns, Xgrid selected Temporal because it provided durable execution, first-class updates, and a clean separation between workflow logic and external side effects.

Temporal’s Core Advantages for Startup Workflows

- Durable execution keeps conversational workflows resilient to crashes, deploys, and intermittent outages.

- Update-driven APIs allow the chat endpoint to append user messages without restarting workflows.

- Signal-based cancellation lets users stop in-flight streaming generation immediately.

- Auditability by design gives a full execution history that supports compliance requirements.

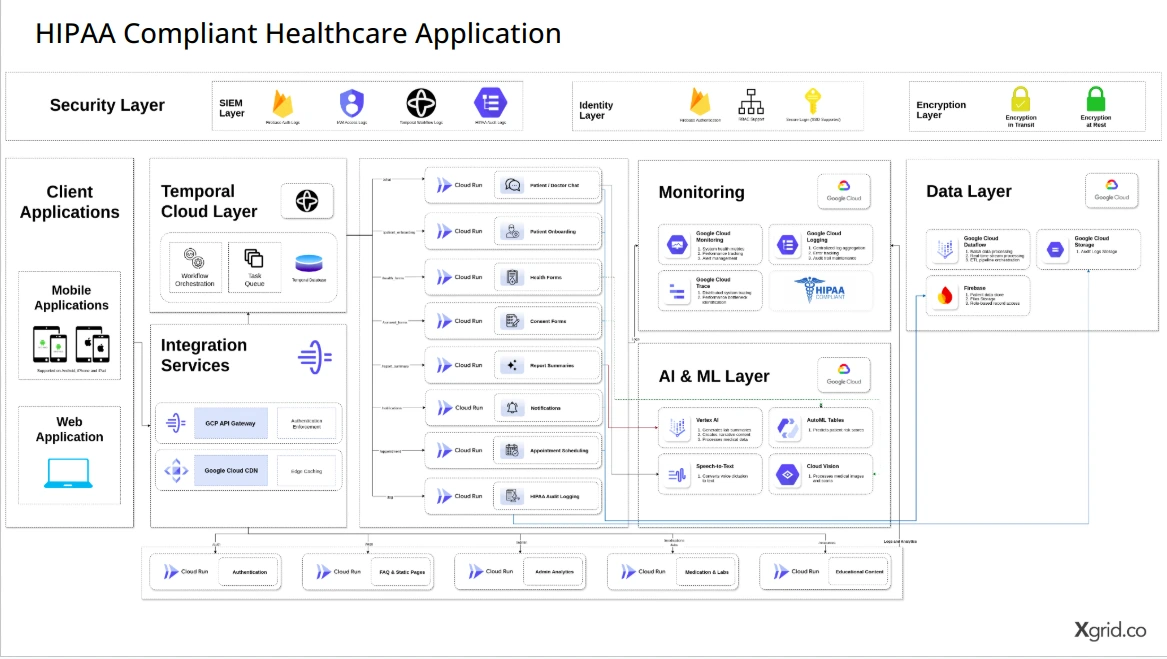

Cloud Architecture Strategy

Xgrid designed a GCP-first architecture while keeping the Temporal layer portable:

- Orchestrator API: Receives chat requests, enforces API key access, and routes updates to Temporal.

- Temporal Cluster: Hosts the workflow state and deterministic execution history.

- Workers: Run activities that call Vertex AI, run guardrails, and write to Firestore.

- Vertex AI + Search: Provides Gemini generation and RAG retrieval.

- Firestore: Stores conversation state and streaming message updates.

Implementation Deep Dive

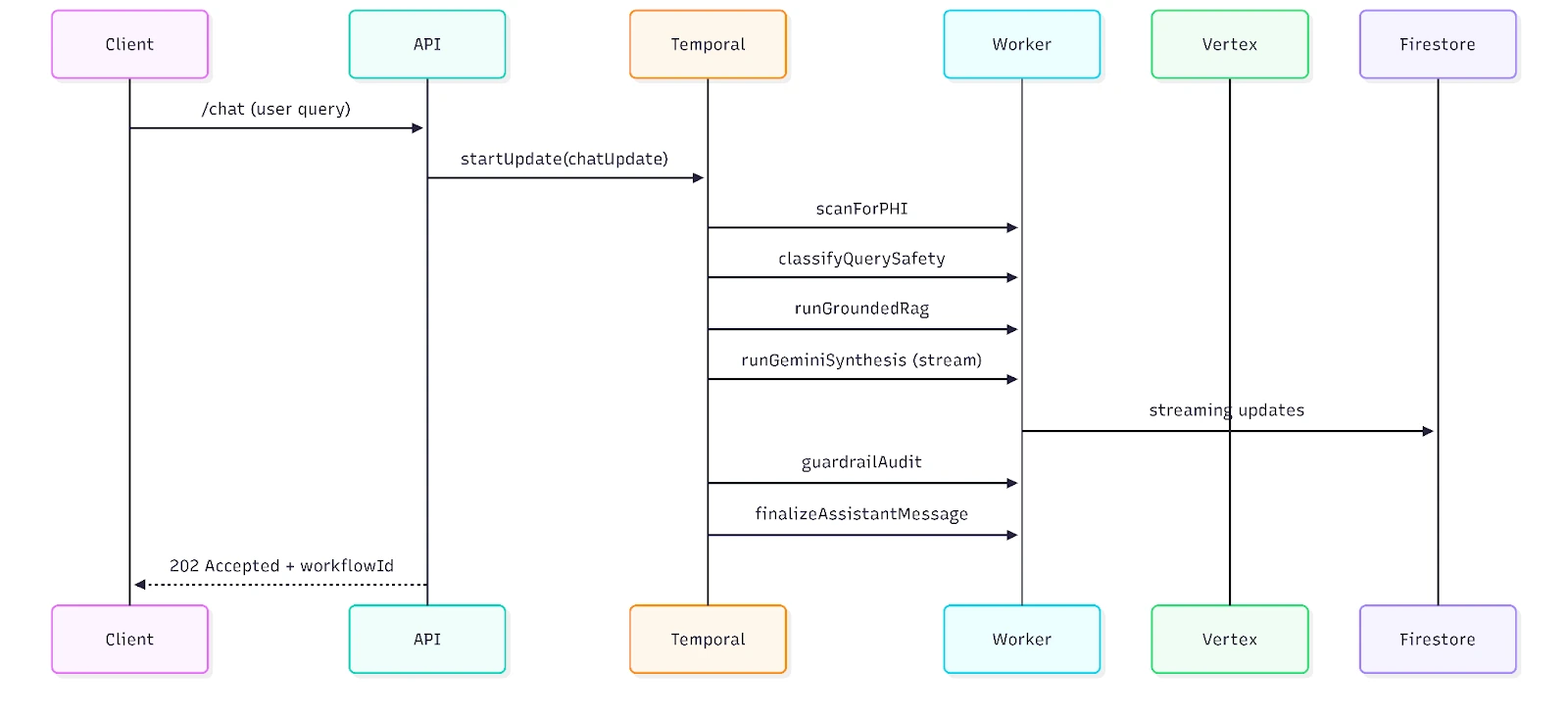

Phase 1: Workflow Contract and Update APIs

Xgrid modeled each chat session as a single long-lived workflow. The API calls a chatUpdate workflow update to append user input to the running session, and each update runs under a bounded timeout to prevent runaway execution.
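To make the session contract concrete, here is a minimal sketch that uses plain asyncio as a stand-in for the workflow. It is not Temporal SDK code, and `ChatSession`, `chat_update`, the `respond` callback, and the 30-second default are all assumptions for illustration. It shows one long-lived object per session, an update that appends the user message, and a bounded timeout around the reply.

```python
import asyncio
from dataclasses import dataclass, field
from typing import Awaitable, Callable


@dataclass
class ChatSession:
    """Plain-asyncio stand-in for one long-lived session workflow."""
    session_id: str
    history: list[dict] = field(default_factory=list)

    async def chat_update(self, text: str,
                          respond: Callable[[list[dict]], Awaitable[str]],
                          timeout_s: float = 30.0) -> str:
        """Append a user message and produce a reply within a bounded time."""
        self.history.append({"role": "user", "content": text})
        try:
            reply = await asyncio.wait_for(respond(self.history), timeout_s)
        except asyncio.TimeoutError:
            # The update ends with a safe fallback instead of running unbounded.
            reply = "Sorry, that took too long. Please try again."
        self.history.append({"role": "assistant", "content": reply})
        return reply
```

In a real Temporal workflow the history would live in durable workflow state rather than in process memory, which is what lets sessions survive restarts.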

Phase 2: Activity Design and RAG Grounding

Xgrid decomposed the workflow into activities aligned with risk boundaries:

- PHI scan before model calls to block sensitive data early.

- Safety classification to enforce medical-only and sensitive-topic policies.

- RAG retrieval using Vertex AI Search to build citations.

- Synthesis using Gemini with streaming support to Firestore.

- Guardrail audit after generation to sanitize and append disclaimers.

This structure keeps model calls isolated, retriable, and observable.
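The activity ordering above can be sketched as a short pipeline that stops as soon as a guardrail blocks the request. Every function body here is a placeholder stub with hypothetical names and trivially simple rules; in the real system each step would be a separate activity calling Vertex AI, Vertex AI Search, or Firestore.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional

DISCLAIMER = "This information is not a substitute for professional medical advice."


@dataclass
class Turn:
    question: str
    citations: list[str] = field(default_factory=list)
    answer: str = ""
    blocked_reason: Optional[str] = None
    trace: list[str] = field(default_factory=list)  # which steps actually ran


def phi_scan(turn: Turn) -> None:
    if "ssn" in turn.question.lower():  # placeholder rule, not a real detector
        turn.blocked_reason = "phi_detected"


def safety_classify(turn: Turn) -> None:
    if "stock" in turn.question.lower():  # placeholder for a medical-only policy
        turn.blocked_reason = "out_of_scope"


def retrieve(turn: Turn) -> None:
    turn.citations = ["[1] hypothetical-guideline"]


def synthesize(turn: Turn) -> None:
    turn.answer = f"Draft answer citing {len(turn.citations)} source(s)."


def guardrail_audit(turn: Turn) -> None:
    turn.answer = f"{turn.answer}\n\n{DISCLAIMER}"


PIPELINE: list[Callable[[Turn], None]] = [
    phi_scan, safety_classify, retrieve, synthesize, guardrail_audit,
]


def run_turn(question: str) -> Turn:
    """Run each step in order and stop early when a guardrail blocks the turn."""
    turn = Turn(question)
    for step in PIPELINE:
        step(turn)
        turn.trace.append(step.__name__)
        if turn.blocked_reason:
            turn.answer = "I can't help with that request."
            break
    return turn
```

Because a blocked turn never reaches `retrieve` or `synthesize`, no model call is made for input that fails the early checks.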

Phase 3: Security and HIPAA Alignment

Xgrid implemented workflow-enforced controls to support HIPAA compliance goals:

- Pre- and post-generation PHI checks prevent sensitive data from entering or leaving the model layer.

- Deterministic audit trails in Temporal provide a reliable record of every decision and output.

- Scoped API access via API keys and restricted endpoints limits unauthorized entry.

- Config-driven guardrails keep policies consistent across sessions and environments.

Temporal’s workflow history records each step and its outcome, so the team can show how a given response was produced and when. That record supports the audit-control expectations of the HIPAA Security Rule.
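As a rough illustration of a pre- and post-generation PHI check, the sketch below applies the same pattern set to both the user input and the model output. The three regular expressions and the names `PHI_PATTERNS`, `find_phi`, and `redact_phi` are assumptions made for this example. Production PHI detection needs far broader coverage than a few patterns, and the case study does not describe the detector that was used.

```python
import re

# Hypothetical, deliberately small pattern set (US-style formats only).
PHI_PATTERNS = {
    "ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "email": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    "phone": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
}


def find_phi(text: str) -> list[str]:
    """Return the sorted names of every PHI pattern found in the text."""
    return sorted(name for name, pattern in PHI_PATTERNS.items()
                  if pattern.search(text))


def redact_phi(text: str) -> str:
    """Replace each match with a labeled placeholder."""
    for name, pattern in PHI_PATTERNS.items():
        text = pattern.sub(f"[{name.upper()} REDACTED]", text)
    return text
```

The same `find_phi` call can gate a request before the model call and reject or redact a response afterwards, which keeps the policy identical on both sides.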

Phase 4: Testing Strategy

Xgrid built confidence with layered tests:

- Workflow tests validate update handling, cancellation, and timeout behavior.

- Activity tests mock Vertex AI and Firestore to validate error handling paths.

- Guardrail tests verify PHI detection and disclaimer behavior across edge cases.
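An activity test of the kind described above might look like the following sketch, which mocks the model client with `unittest.mock` instead of calling Vertex AI. The `synthesize` wrapper, the `client.generate` method, and both exception classes are hypothetical; they stand in for whatever client interface and error types the real activities use.

```python
import unittest
from unittest.mock import Mock


class ProviderTimeout(Exception):
    """Hypothetical error raised by the model client when a call times out."""


class ModelUnavailable(Exception):
    """Hypothetical domain error that a retry policy can treat as retryable."""


def synthesize(client, prompt: str) -> str:
    """Activity-style wrapper that translates provider errors into domain errors."""
    try:
        return client.generate(prompt)
    except ProviderTimeout as exc:
        raise ModelUnavailable("model call timed out") from exc


class SynthesizeTests(unittest.TestCase):
    def test_success_returns_model_text(self):
        client = Mock()
        client.generate.return_value = "Rest and fluids."
        self.assertEqual(synthesize(client, "q"), "Rest and fluids.")
        client.generate.assert_called_once_with("q")

    def test_timeout_becomes_domain_error(self):
        client = Mock()
        client.generate.side_effect = ProviderTimeout("deadline exceeded")
        with self.assertRaises(ModelUnavailable):
            synthesize(client, "q")
```

Keeping the client behind a small wrapper makes error paths easy to exercise without network access or quota.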

Phase 5: Monitoring and Observability

Xgrid embedded operational visibility into the system:

- Workflow histories provide a complete trace of each user interaction.

- Structured logs around Vertex AI calls expose latency and rate limit behavior.

- Firestore streaming updates make UI progress visible without polling.
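Structured logging around a model call can be sketched with a small context manager that emits one JSON record per call, including latency and outcome. The logger name, field names, and `timed_call` helper are assumptions for illustration, not the project's actual logging schema.

```python
import json
import logging
import time
from contextlib import contextmanager

logger = logging.getLogger("model_calls")


@contextmanager
def timed_call(operation: str, **fields):
    """Log one JSON record per call with its latency and outcome."""
    start = time.perf_counter()
    record = {"operation": operation, "outcome": "ok", **fields}
    try:
        yield record  # callers may attach extra fields, such as token counts
    except Exception as exc:
        record["outcome"] = type(exc).__name__
        raise
    finally:
        record["latency_ms"] = round((time.perf_counter() - start) * 1000, 1)
        logger.info(json.dumps(record))
```

Wrapping a call as `with timed_call("generate", session_id="s1"):` yields records that can be filtered by outcome to see how often rate limits or timeouts occur.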

Results: Reliability and User Experience

Even at early-stage scale, the client benefited from orchestration-driven reliability.

Reliability Improvements

- Fewer failed requests: transient Vertex AI errors are retried or surfaced cleanly.

- Consistent state: conversations survive restarts and can be rehydrated safely.

- Controlled cancellation: users can stop generation immediately without corrupting state.
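Controlled cancellation can be illustrated with a cooperative stop flag that the generation loop checks between tokens. In the real system the flag would be set by a Temporal signal; this sketch uses an `asyncio.Event` as a stand-in, and `generate` and `fake_token_stream` are hypothetical names.

```python
import asyncio
from typing import AsyncIterator


async def fake_token_stream(text: str) -> AsyncIterator[str]:
    """Stand-in for a streaming model response that yields one word at a time."""
    for word in text.split():
        await asyncio.sleep(0)  # give other tasks a chance to run
        yield word


async def generate(stream: AsyncIterator[str], stop: asyncio.Event) -> dict:
    """Consume tokens until the stream ends or a stop is requested."""
    parts: list[str] = []
    async for token in stream:
        if stop.is_set():
            # Return what was produced so far; stored state stays consistent.
            return {"status": "cancelled", "text": " ".join(parts)}
        parts.append(token)
    return {"status": "complete", "text": " ".join(parts)}
```

Returning a well-formed partial result, rather than aborting mid-write, is what keeps a cancelled turn from corrupting the conversation record.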

Performance Gains

- Lower perceived latency: streaming responses are written to Firestore every second, so users see partial output before generation completes.

- Better throughput: activities run independently with controlled concurrency.

- Smaller failure blast radius: each step is isolated and recoverable.
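The once-per-second streaming update can be sketched as a small buffer that accumulates chunks and writes the full text no more often than a minimum interval. `ThrottledWriter` and its `write` callback are hypothetical; in the real system the callback would update a Firestore document, which this sketch does not attempt to model.

```python
import time
from typing import Callable, Optional


class ThrottledWriter:
    """Buffer streamed chunks and write the full text at most once per interval."""

    def __init__(self, write: Callable[[str], None], min_interval_s: float = 1.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._write = write
        self._interval = min_interval_s
        self._clock = clock
        self._text = ""
        self._last_write: Optional[float] = None
        self._dirty = False  # True when buffered text has not been written yet

    def append(self, chunk: str) -> None:
        self._text += chunk
        self._dirty = True
        now = self._clock()
        if self._last_write is None or now - self._last_write >= self._interval:
            self._flush(now)

    def close(self) -> None:
        """Write any remaining buffered text when generation finishes."""
        if self._dirty:
            self._flush(self._clock())

    def _flush(self, now: float) -> None:
        self._write(self._text)
        self._last_write = now
        self._dirty = False
```

The first chunk is written immediately so the user sees output right away, and `close` guarantees the final text is persisted even if the last chunk arrived inside the throttle window.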

Operational Excellence

- Faster debugging: Temporal histories make it easy to trace user-level issues.

- Simpler upgrades: workflow code can evolve without breaking active sessions.

- Lower on-call load: transient failures are retried automatically, and the remaining ones fail in predictable, traceable ways.

Technical Architecture Details

Cloud Architecture Overview

- API Gateway and Orchestrator: validate API keys, normalize inputs, and start workflow updates.

- Temporal Cluster: owns workflow state, update handling, and deterministic logic.

- Workers: execute activities, connect to Vertex AI, and write to Firestore.

- Vertex AI Search: provides citations for grounded responses.

- Vertex AI Gemini: performs synthesis and streaming generation.

- Firestore: persists conversation history and streaming message state.

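Temporal Workflow Execution Path

Each user message follows the same path through the session workflow: a PHI scan, safety classification, RAG retrieval through Vertex AI Search, Gemini synthesis streamed to Firestore, and a post-generation guardrail audit that sanitizes the output and appends disclaimers.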

Data Security and HIPAA Alignment

- PHI gating: sensitive inputs are blocked before model calls, and unsafe outputs are rejected before finalization.

- Guardrail policies: enforce medical-only scope and sensitive-topic refusals.

- Audit trail: Temporal history provides a verifiable record that supports HIPAA compliance requirements.

- Data minimization: only required context is sent to model calls, and streaming writes are throttled.
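One way to approach data minimization is to forward only the most recent messages that fit a fixed budget, and only the fields the model needs. The sketch below is an assumption about how such trimming could work; `select_context`, the message shape, and both default budgets are hypothetical.

```python
def select_context(history: list[dict], max_messages: int = 6,
                   max_chars: int = 4000) -> list[dict]:
    """Keep the most recent messages that fit within both budgets."""
    recent = history[-max_messages:] if max_messages > 0 else []
    selected: list[dict] = []
    used = 0
    for message in reversed(recent):  # walk from newest to oldest
        size = len(message["content"])
        if used + size > max_chars:
            break
        # Forward only role and content; other metadata never reaches the model.
        selected.append({"role": message["role"], "content": message["content"]})
        used += size
    return list(reversed(selected))  # restore chronological order
```

Dropping every key except `role` and `content` means identifiers stored alongside a message are not sent to the model layer by accident.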

Lessons Learned and Best Practices

Implementation Insights

- Start with a single workflow per user session to keep state consistent.

- Use updates for high-frequency interactions instead of spawning new workflows.

- Keep activity boundaries aligned with risk and external dependencies.

Operational Best Practices

- Treat guardrails as first-class workflow steps, not UI-only checks.

- Monitor retry behavior to tune backoff and rate-limit handling.

- Use Firestore streaming updates to improve user experience without extra services.

Looking Forward: Scaling the Platform

The foundation now supports additional features without rewriting the core.

Future Enhancements

- Multi-region deployment: improve resilience and reduce latency for new markets.

- Broader modalities: expand to live audio and multimodal inputs.

- Policy versioning: evolve guardrails safely while keeping historical audits intact.

Conclusion

Xgrid delivered a greenfield medical AI platform with the reliability and auditability typically reserved for much larger organizations. By enforcing safety checks inside the workflow, orchestrating multi-step AI calls, and maintaining durable conversation state, the client now has a system that is fast, resilient, and HIPAA-aligned. The result is a platform that can grow with user demand while protecting patient trust. Xgrid’s Temporal consulting services can help your team achieve similar reliability and compliance in AI-driven workflows.